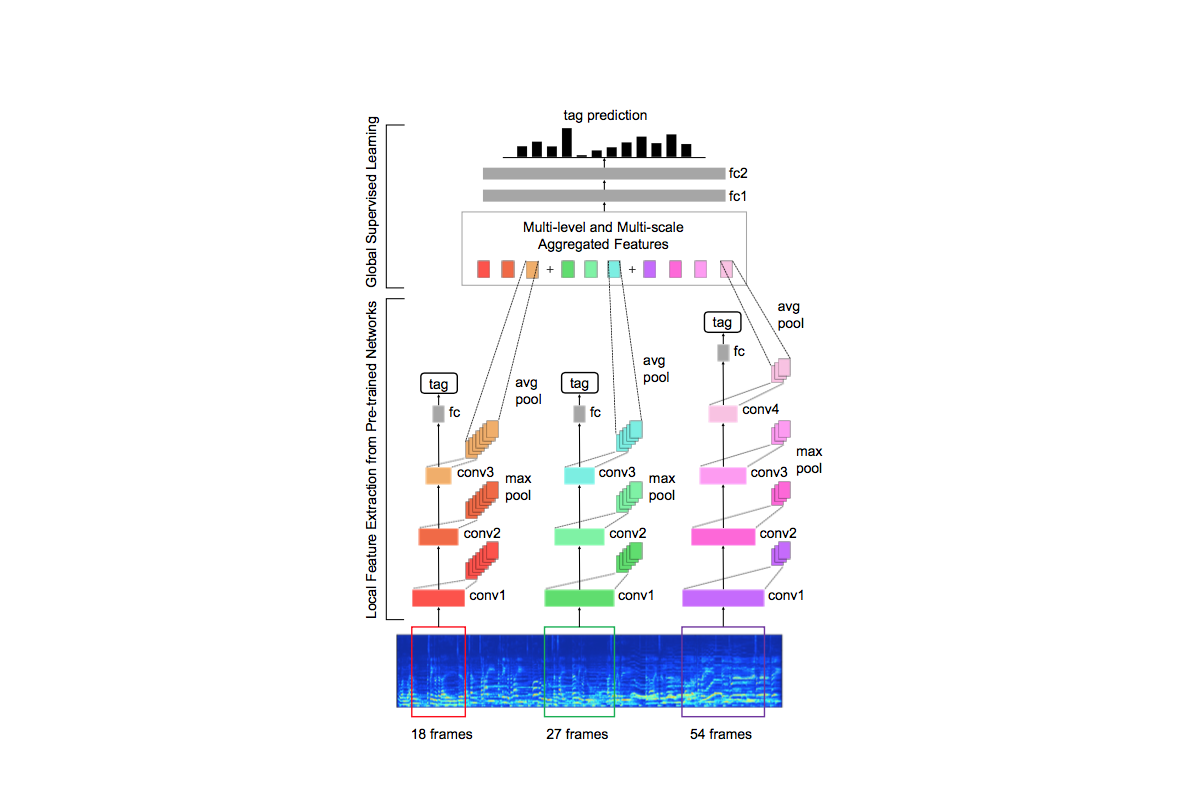

Multi-Level and Multi-Scale Feature Aggregation Using Pre-trained Convolutional Neural Networks for Music Auto-tagging

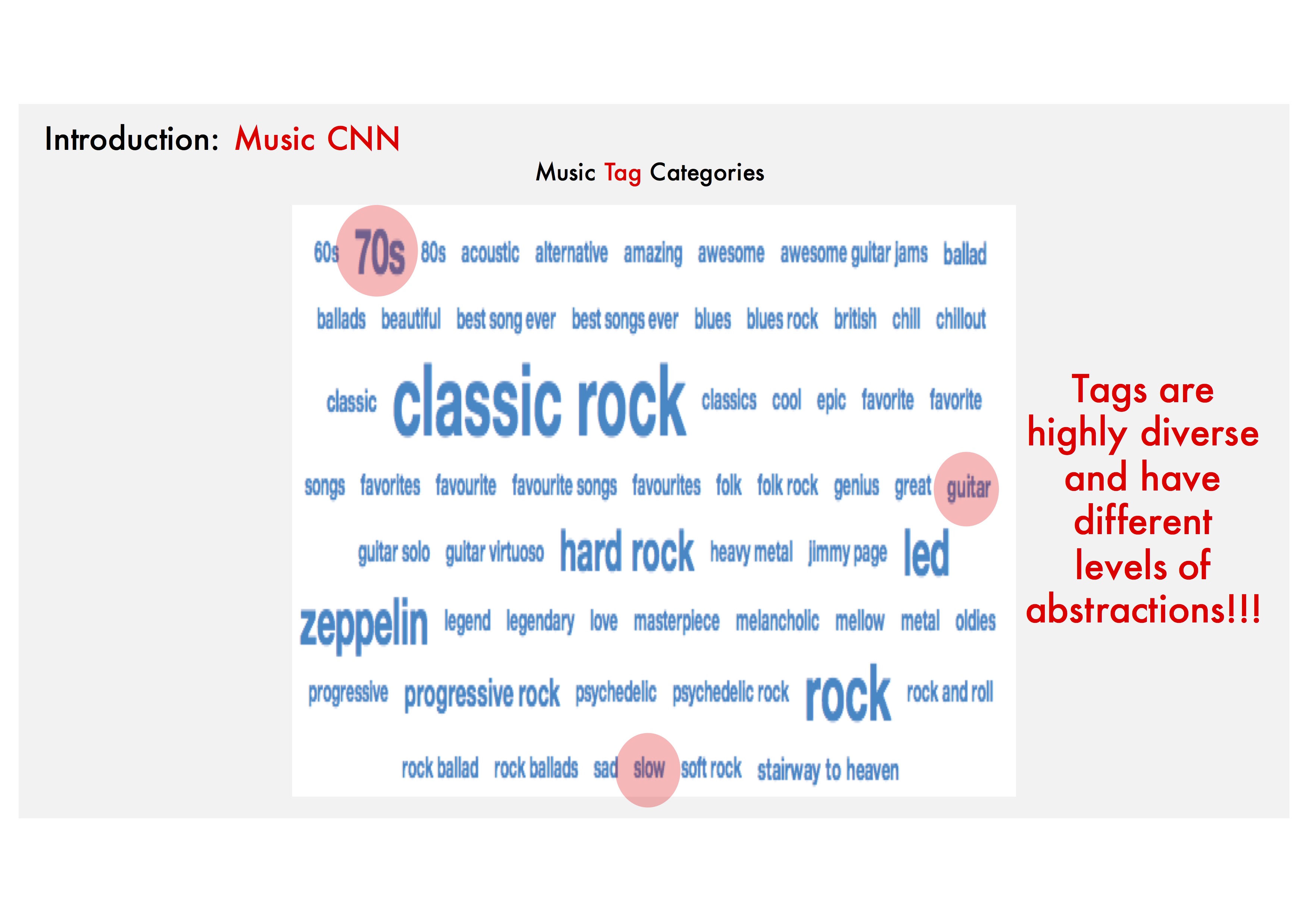

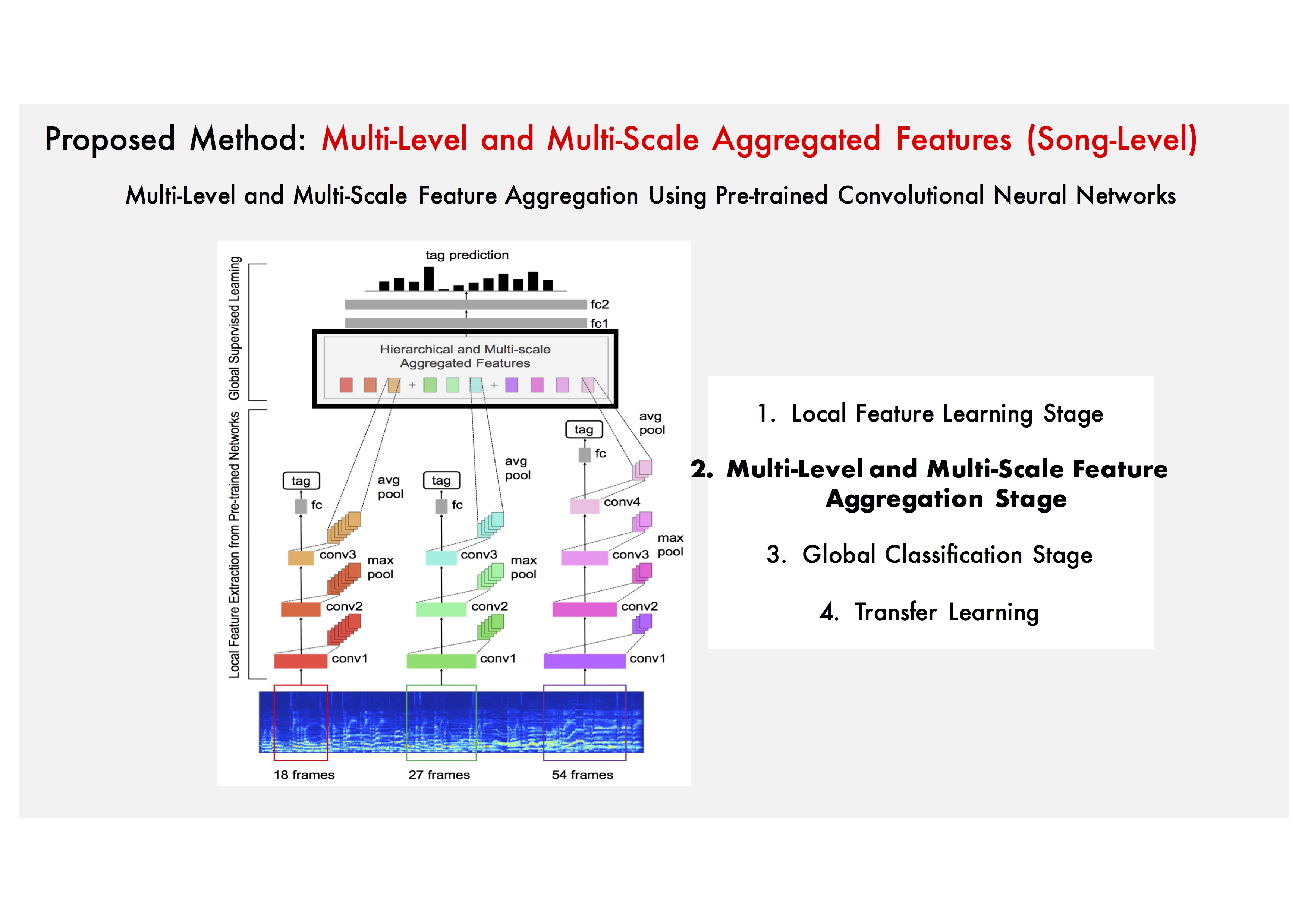

Music auto-tagging is often handled in a similar manner to image classification by regarding the 2D audio spectrogram as image data. However, music auto-tagging differs from image classification in that the tags are highly diverse and have different levels of abstraction. Considering this issue, we propose a convolutional neural network (CNN)-based feature aggregation method that embraces multi-level and multi-scale features.

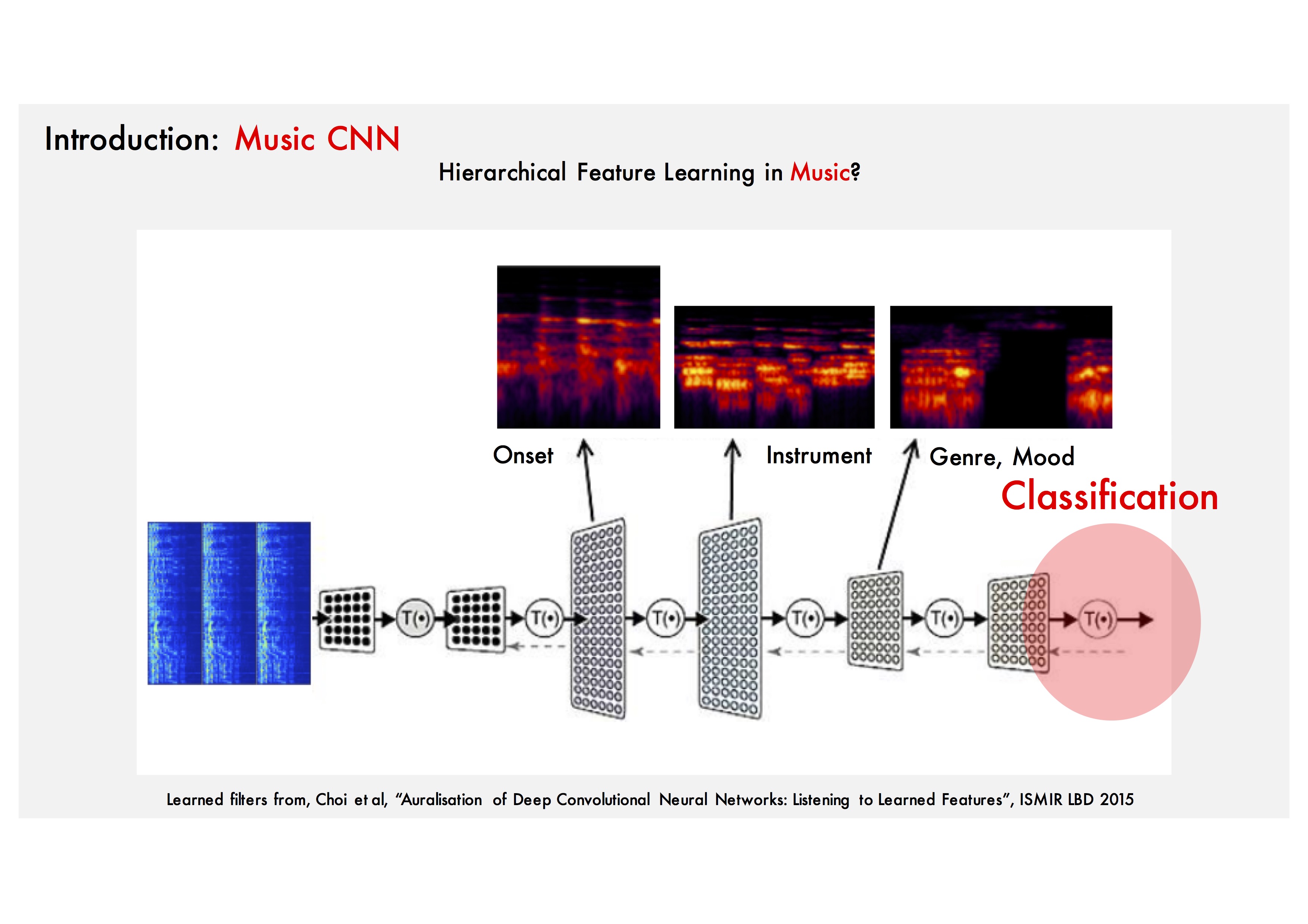

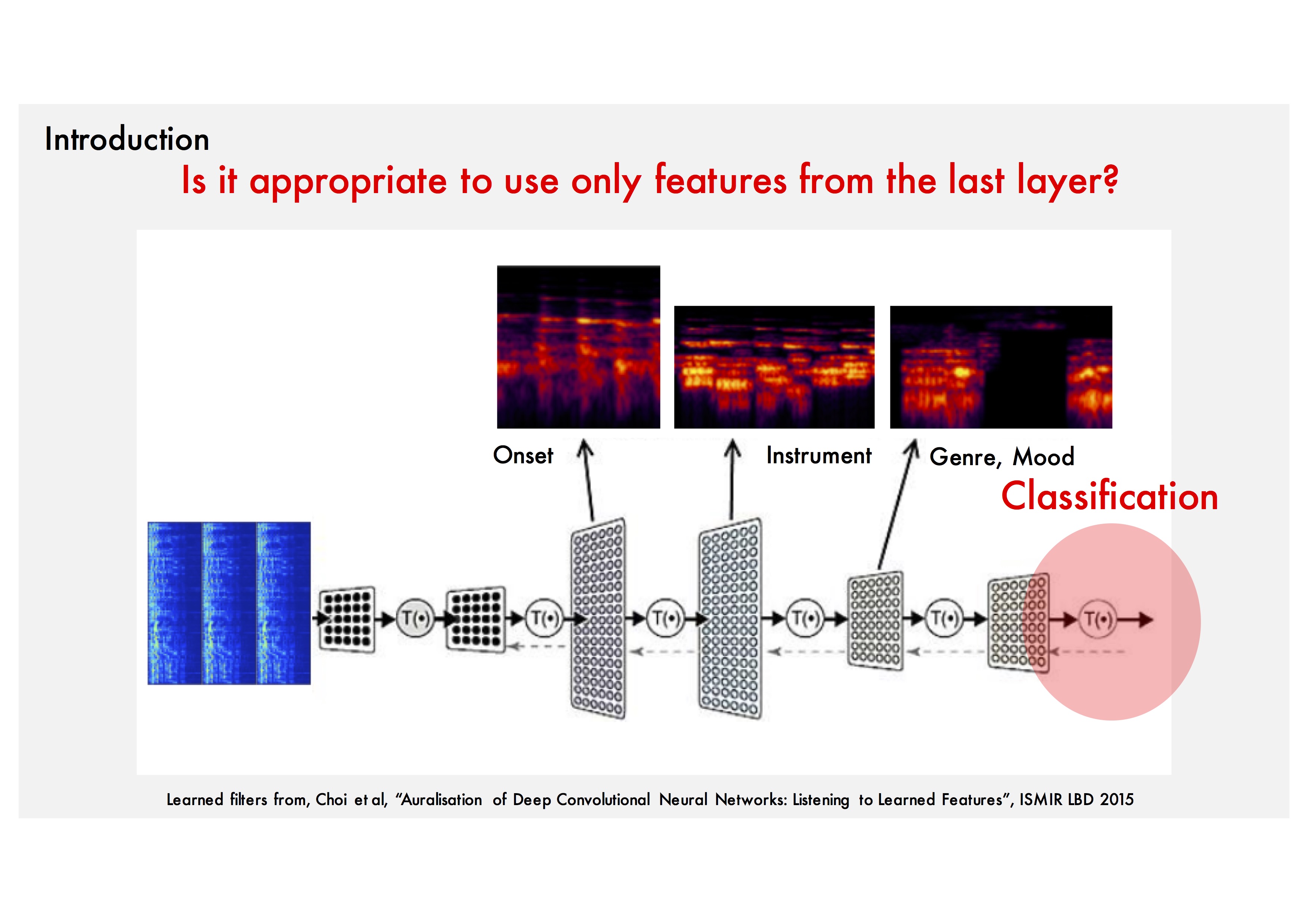

Motivation: Hierarchy in Music.

Motivation: Music tags with various levels of abstraction.

Motivation: Encode music using the CNN's last hidden layer?

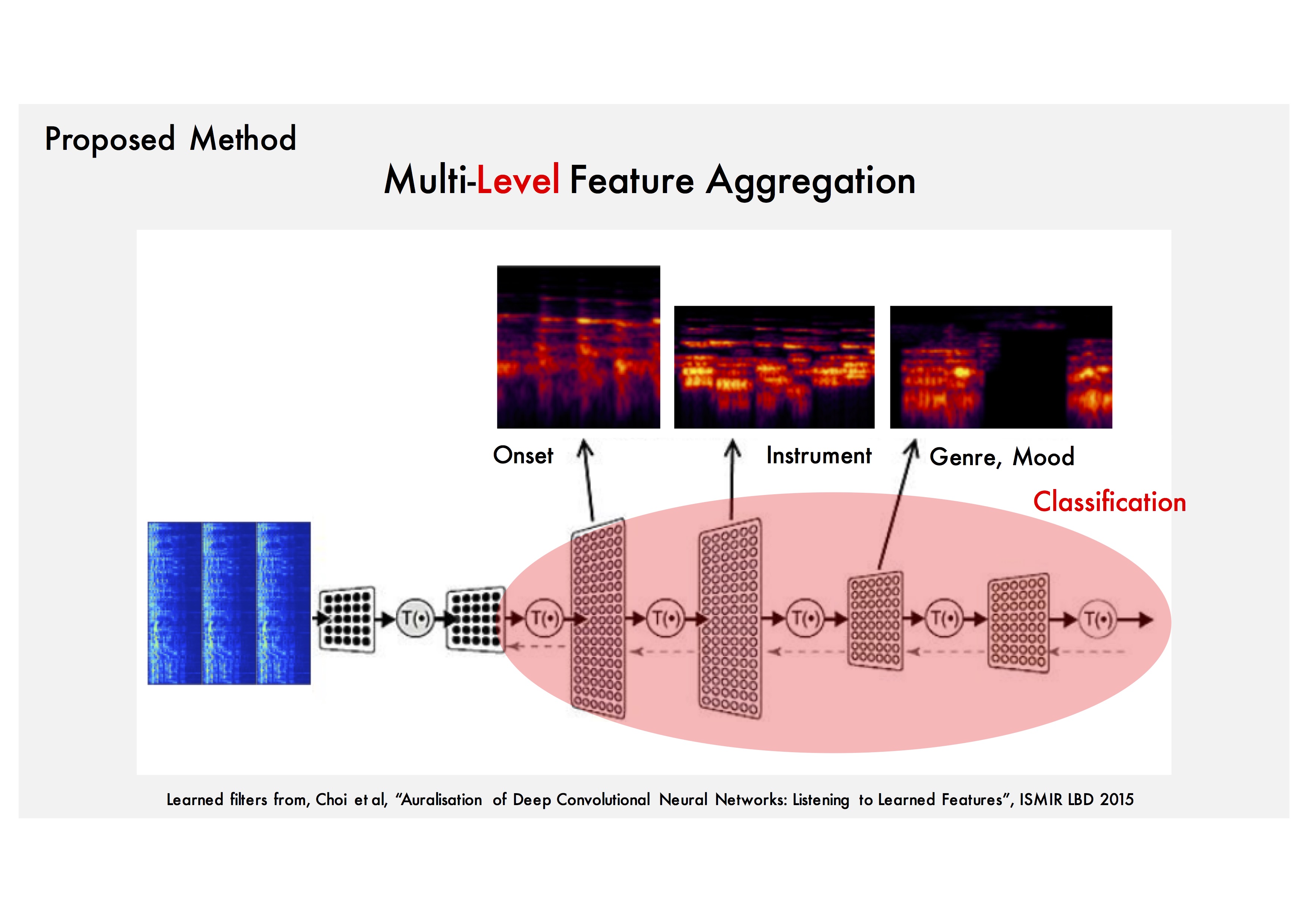

Motivation: Let's use the intermediate-layer features.

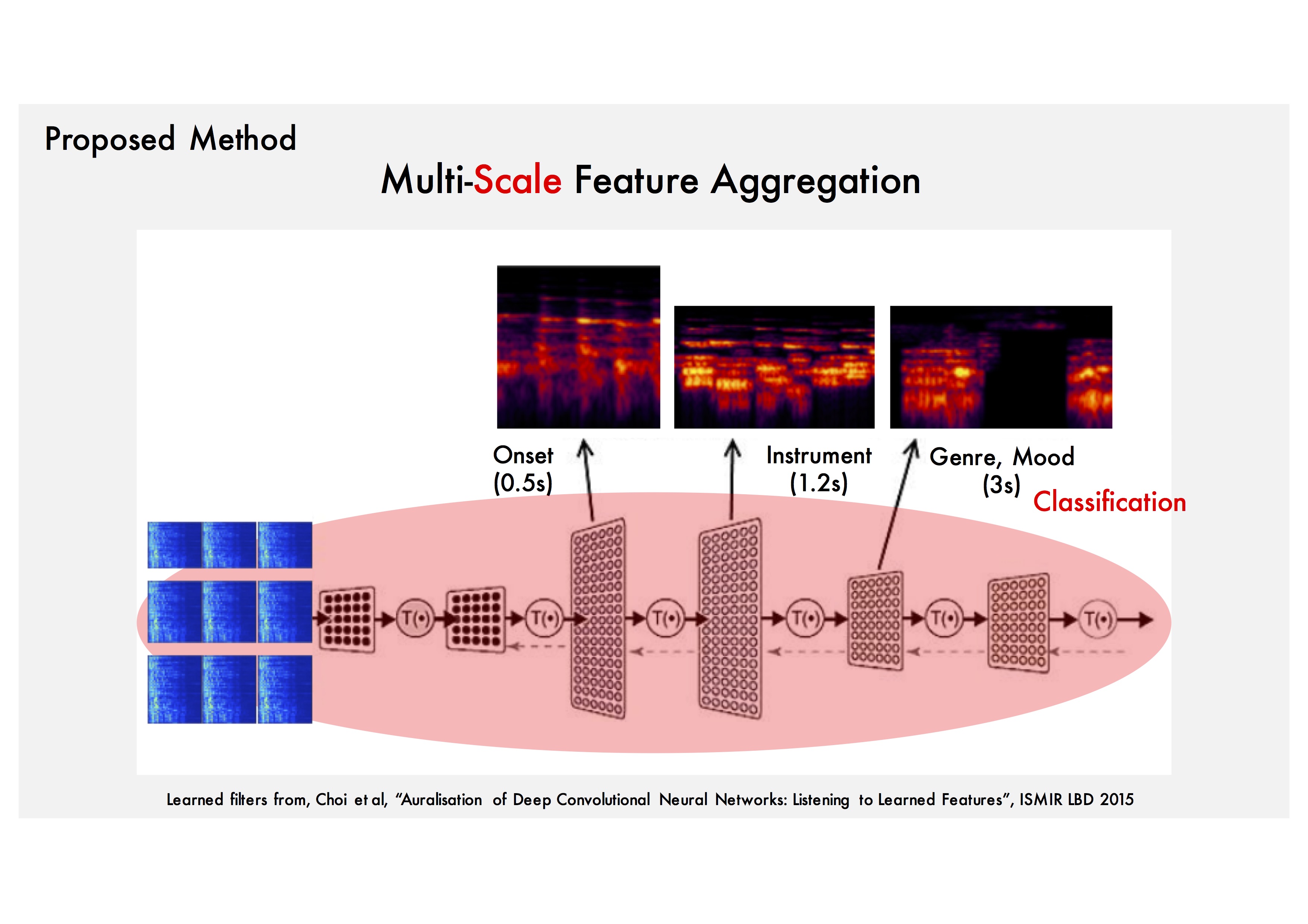

Motivation: We can also consider multi-scale features by using several pre-trained CNNs with different input sizes.

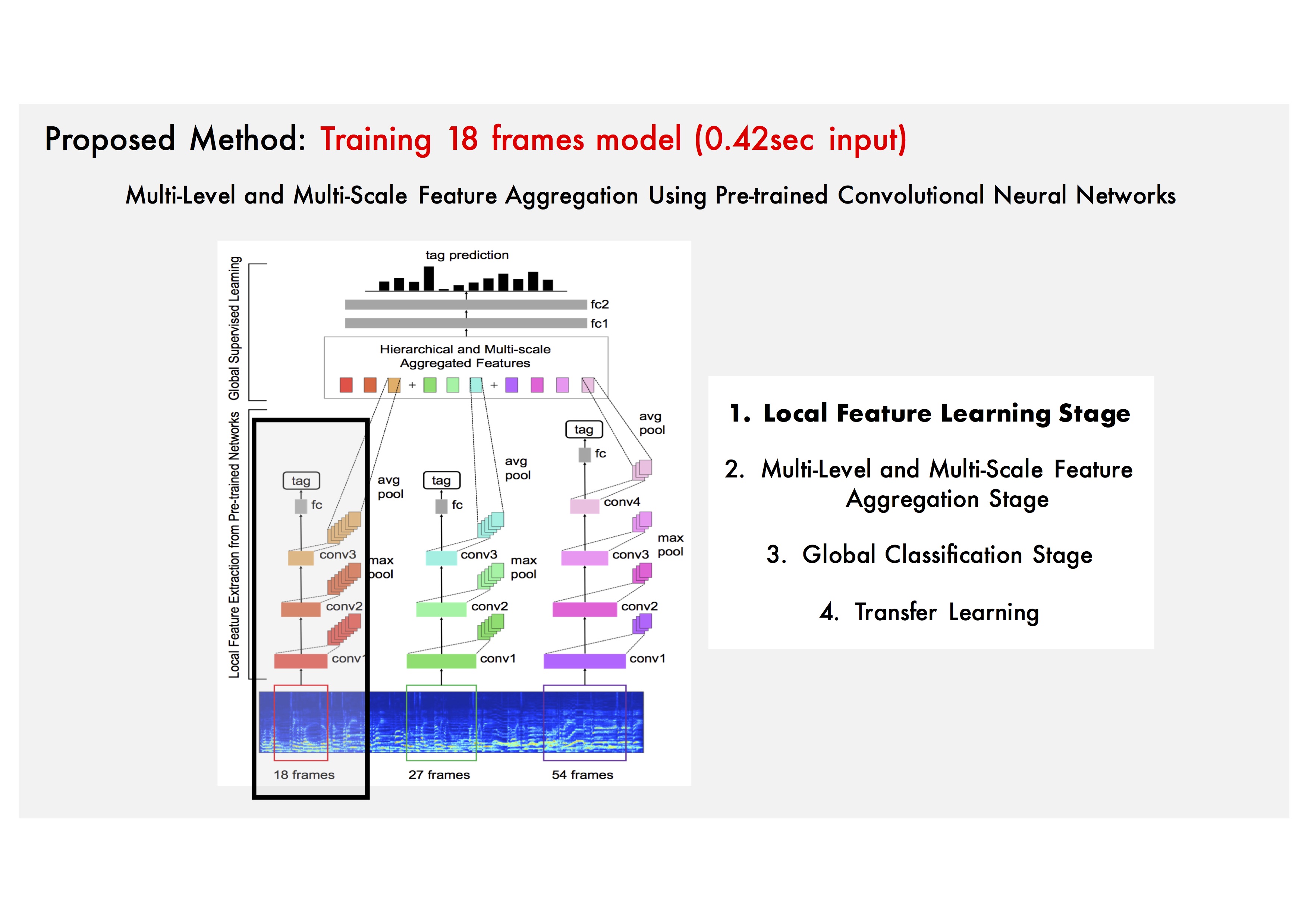

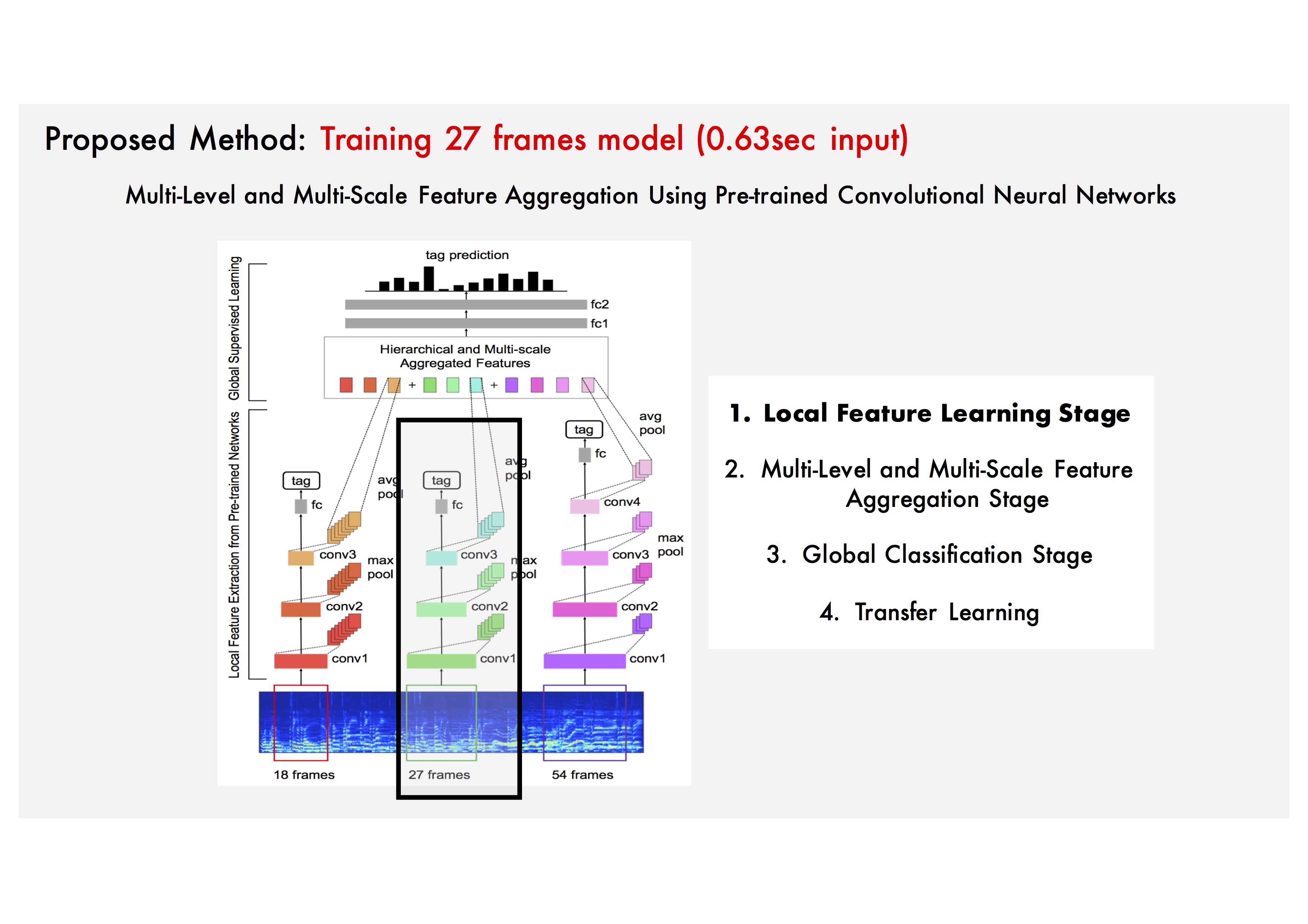

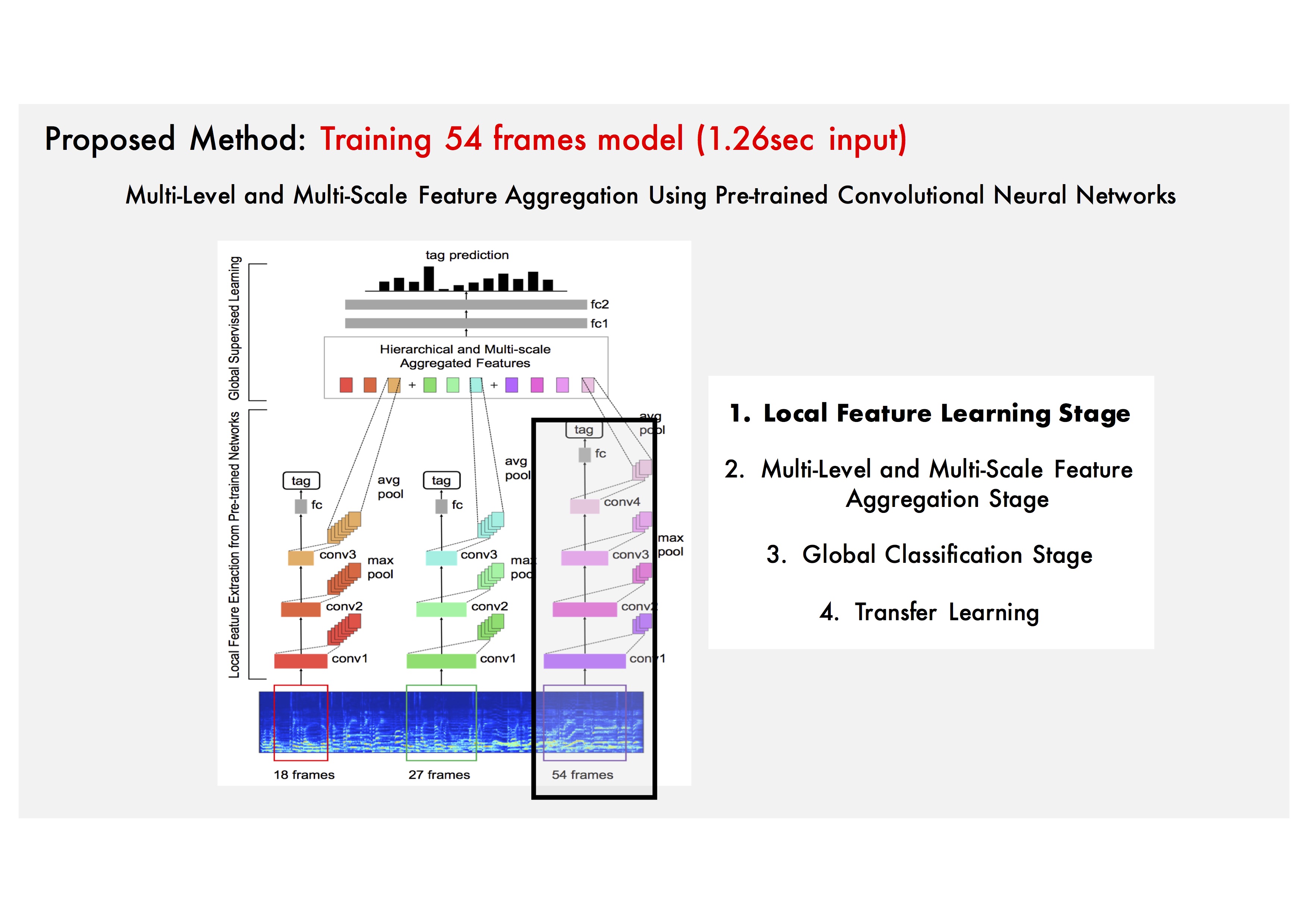

Pre-training: Let's make feature extractors.

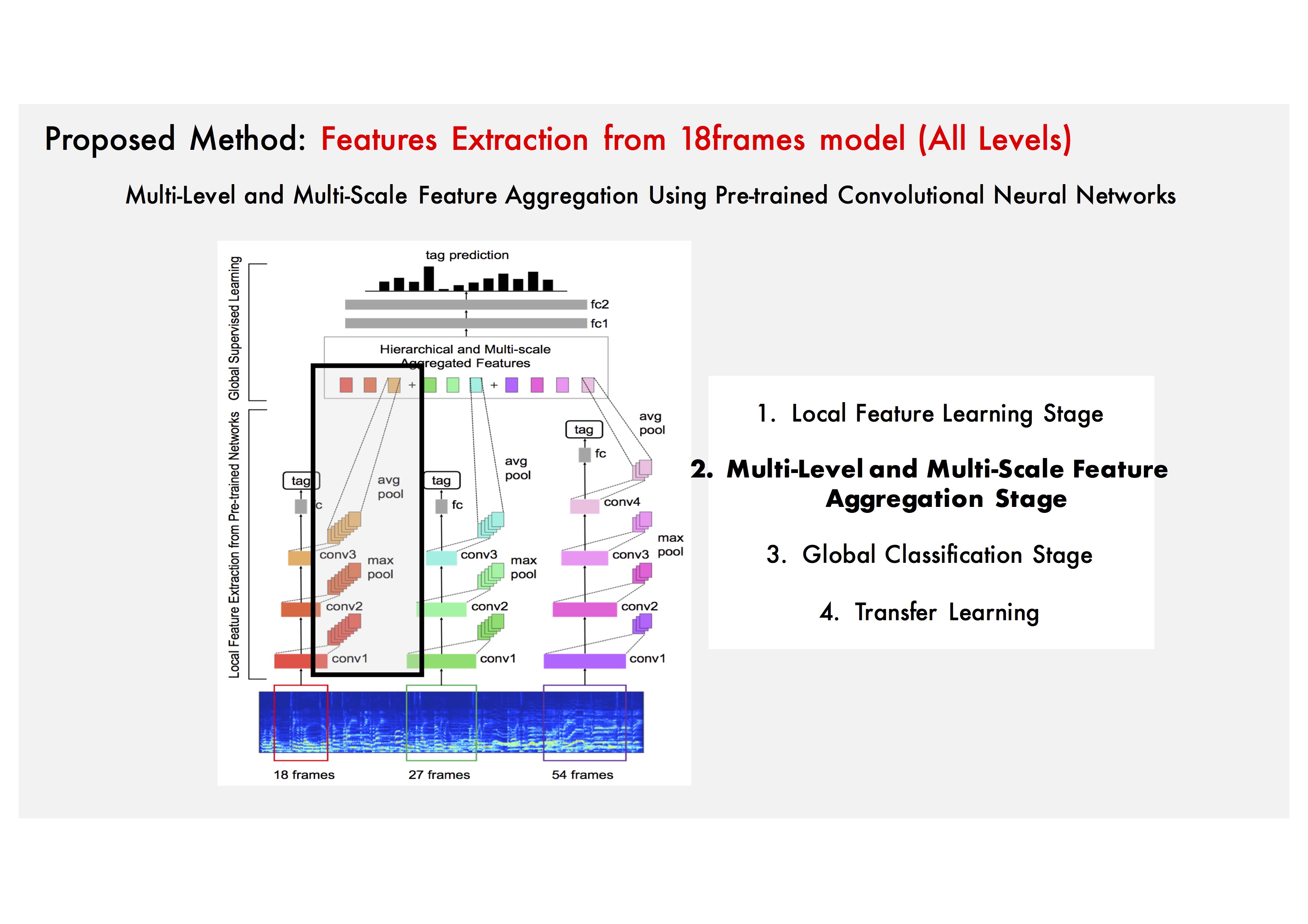

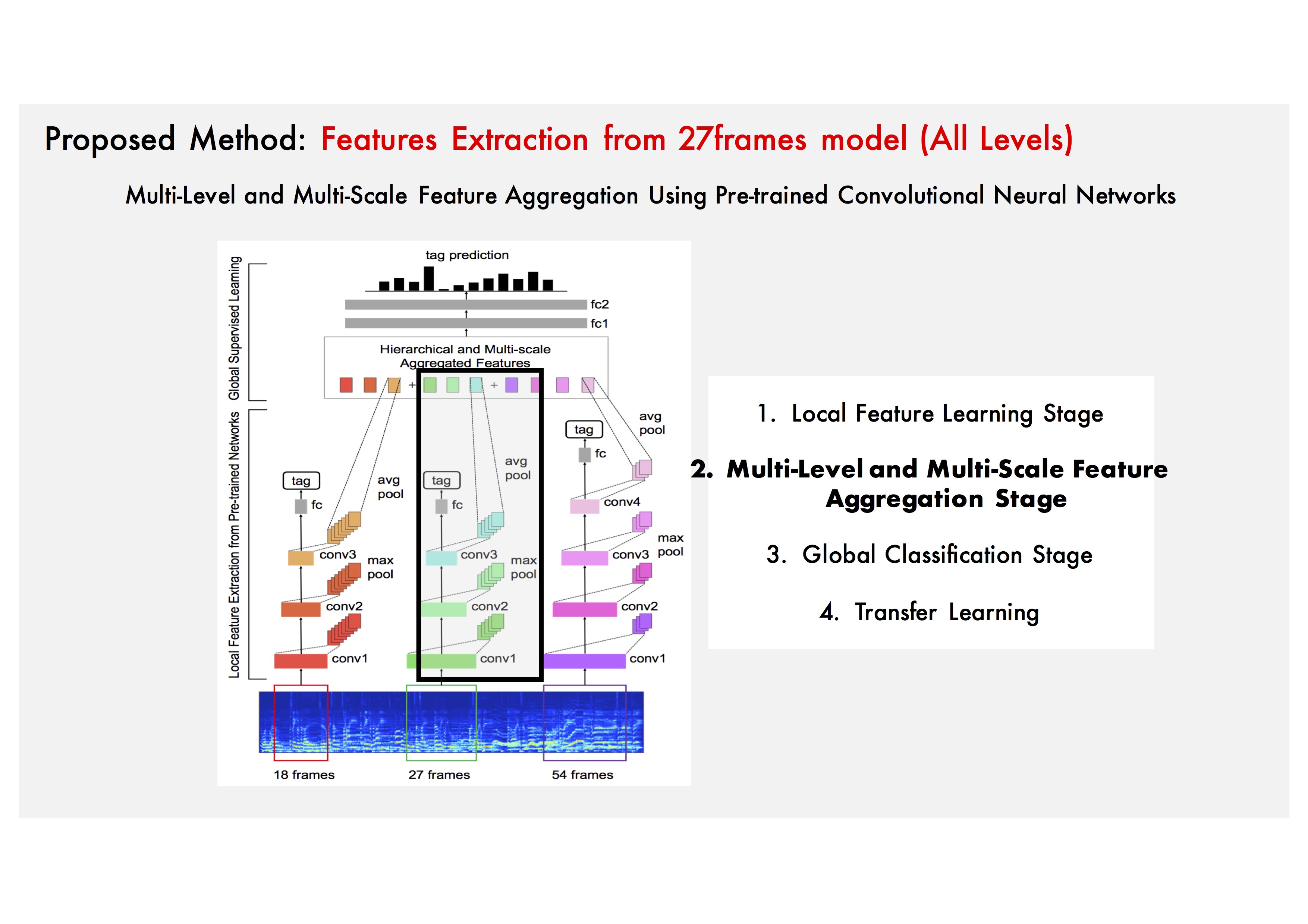

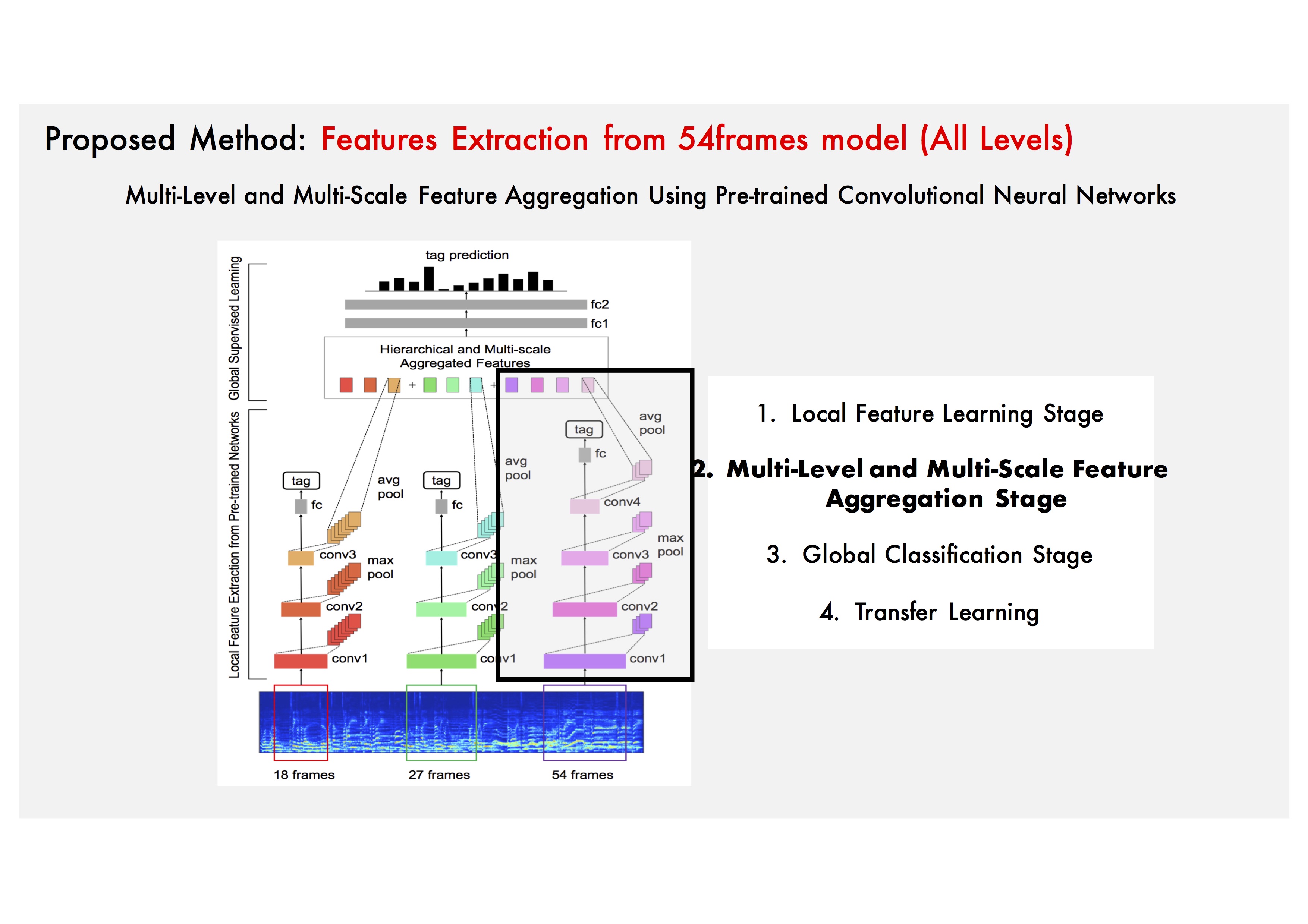

Feature extraction: To better capture local characteristics, the frame-wise dimension of each layer's output is max-pooled to 1. Average pooling is then applied over all segments of a song, so the feature size of each layer becomes equal to the number of filters in that layer.
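The two pooling steps can be sketched in NumPy. This is a minimal illustration, not the authors' code: the function name `aggregate_layer` and the array layout `(n_segments, n_frames, n_filters)` are assumptions made for the example.

```python
import numpy as np

def aggregate_layer(activations):
    """Pool one layer's activations into a single song-level vector.

    activations: array of shape (n_segments, n_frames, n_filters)
    (this layout is an assumption for the sketch).
    Returns an array of shape (n_filters,).
    """
    activations = np.asarray(activations, dtype=float)
    # Max-pool the frame-wise dimension down to 1 for every segment.
    segment_features = activations.max(axis=1)   # (n_segments, n_filters)
    # Average the segment-level features over the whole song.
    return segment_features.mean(axis=0)         # (n_filters,)

# Toy input: 10 segments, 8 frames per segment, 64 filters.
rng = np.random.default_rng(0)
demo_activations = rng.random((10, 8, 64))
song_feature = aggregate_layer(demo_activations)
```

Whatever the number of segments or frames, the result has one value per filter, which matches the statement that the feature size equals the number of filters in the layer.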

Feature extraction: Another Feature Aggregation.

Feature extraction: Another feature aggregation at a different scale.

Rich features: Song-level aggregated features are now obtained.
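One plausible way to combine the per-layer, per-scale vectors into a single song-level feature is simple concatenation. The sketch below assumes that choice; the helper name `concat_features`, the dictionary layout, and the scale labels are hypothetical and chosen only for illustration.

```python
import numpy as np

def concat_features(features_by_scale):
    """Concatenate layer-wise feature vectors from several scales.

    features_by_scale: dict mapping a scale label to a list of 1-D
    layer feature vectors (hypothetical layout for this sketch).
    """
    parts = []
    for scale in sorted(features_by_scale):      # fixed, repeatable order
        for layer_feature in features_by_scale[scale]:
            parts.append(np.asarray(layer_feature, dtype=float).ravel())
    return np.concatenate(parts)

# Toy example: two scales, three layers each with 4, 8 and 16 filters.
rng = np.random.default_rng(0)
toy_features = {
    "scale_a": [rng.random(n) for n in (4, 8, 16)],
    "scale_b": [rng.random(n) for n in (4, 8, 16)],
}
song_vector = concat_features(toy_features)
```

Sorting the scale labels keeps the feature order identical across songs, which a downstream classifier needs.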

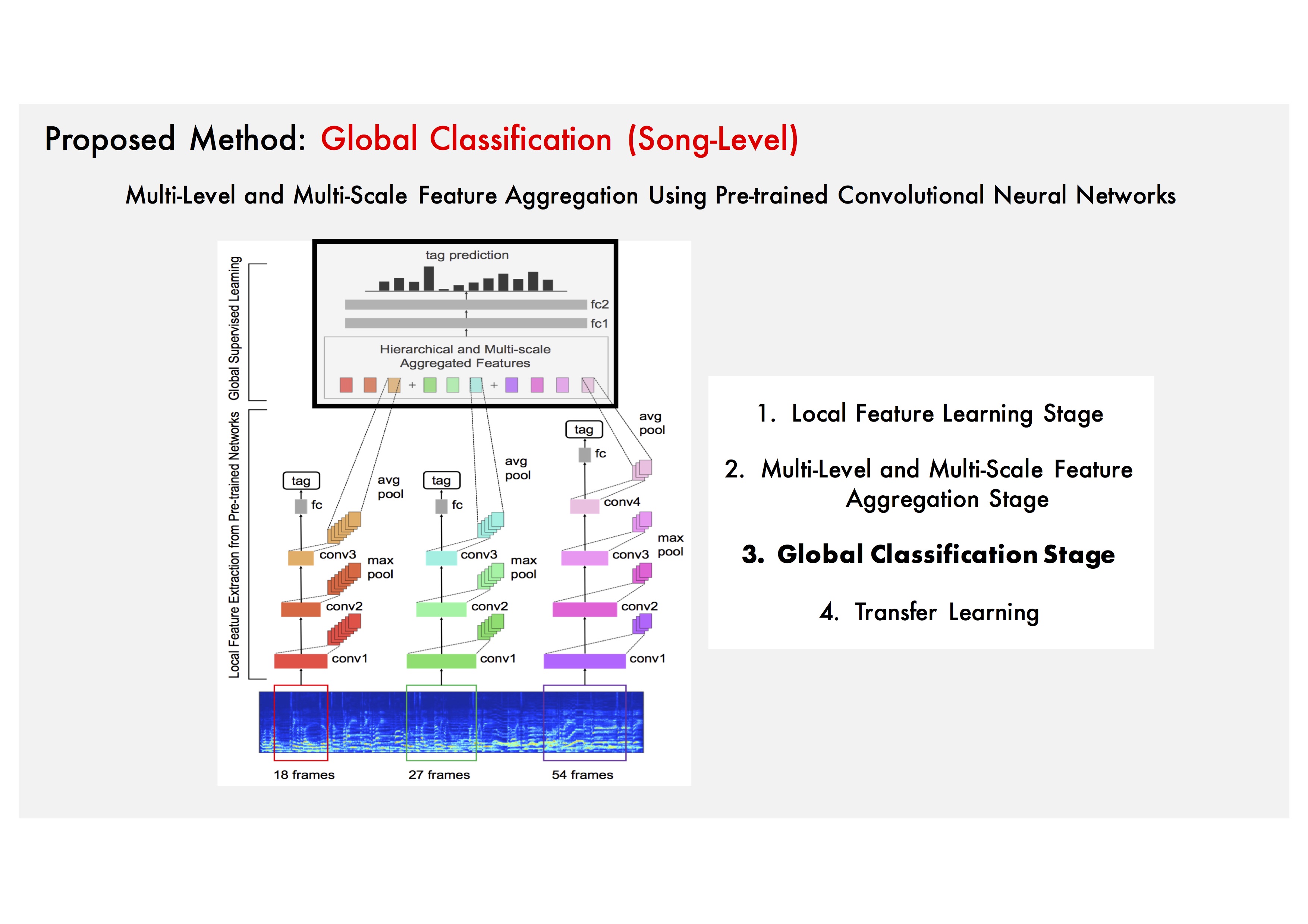

Classification: Classify the aggregated features using DNNs.
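As a rough picture of this stage, the sketch below runs a forward pass of a small fully connected network over one song-level feature vector. The layer sizes, ReLU hidden units, sigmoid outputs (one independent score per tag), and random weights are all assumptions for illustration; the slides do not specify the classifier's architecture.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def predict_tags(feature, weights, biases):
    """Forward pass of a fully connected network.

    Hidden layers use ReLU; the output layer uses a sigmoid so that
    each tag receives an independent score in (0, 1).
    """
    h = np.asarray(feature, dtype=float)
    for W, b in zip(weights[:-1], biases[:-1]):
        h = relu(h @ W + b)
    return sigmoid(h @ weights[-1] + biases[-1])

# Hypothetical sizes: 256-dim song feature, one hidden layer, 50 tags.
rng = np.random.default_rng(0)
sizes = [256, 128, 50]
weights = [rng.normal(0.0, 0.1, (m, n)) for m, n in zip(sizes[:-1], sizes[1:])]
biases = [np.zeros(n) for n in sizes[1:]]
tag_scores = predict_tags(rng.random(256), weights, biases)
```

In practice the weights would be learned from labeled songs; the random values here only show the shapes involved.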

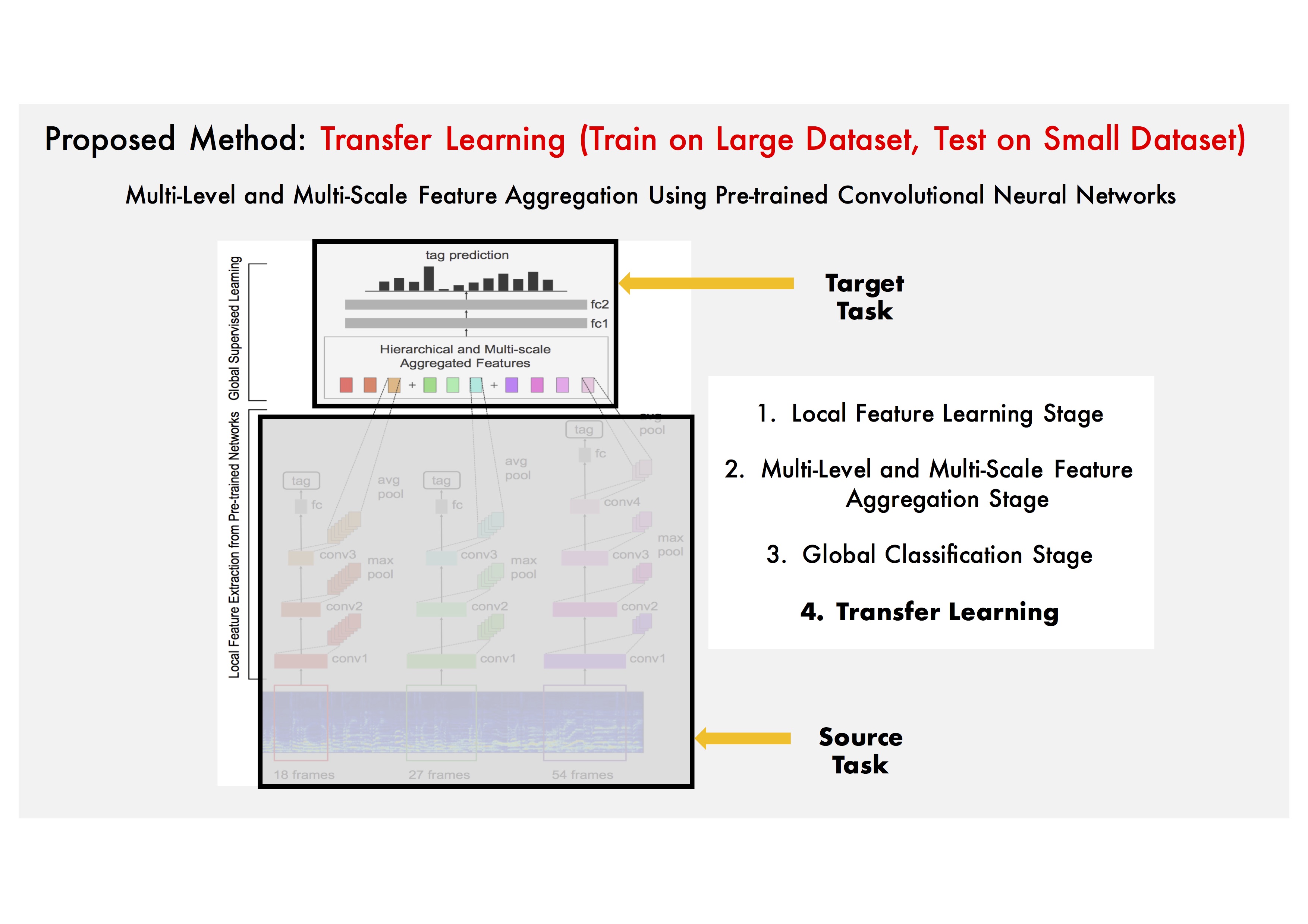

Transfer Learning: Since our method consists of two stages, transfer learning is easily applied.

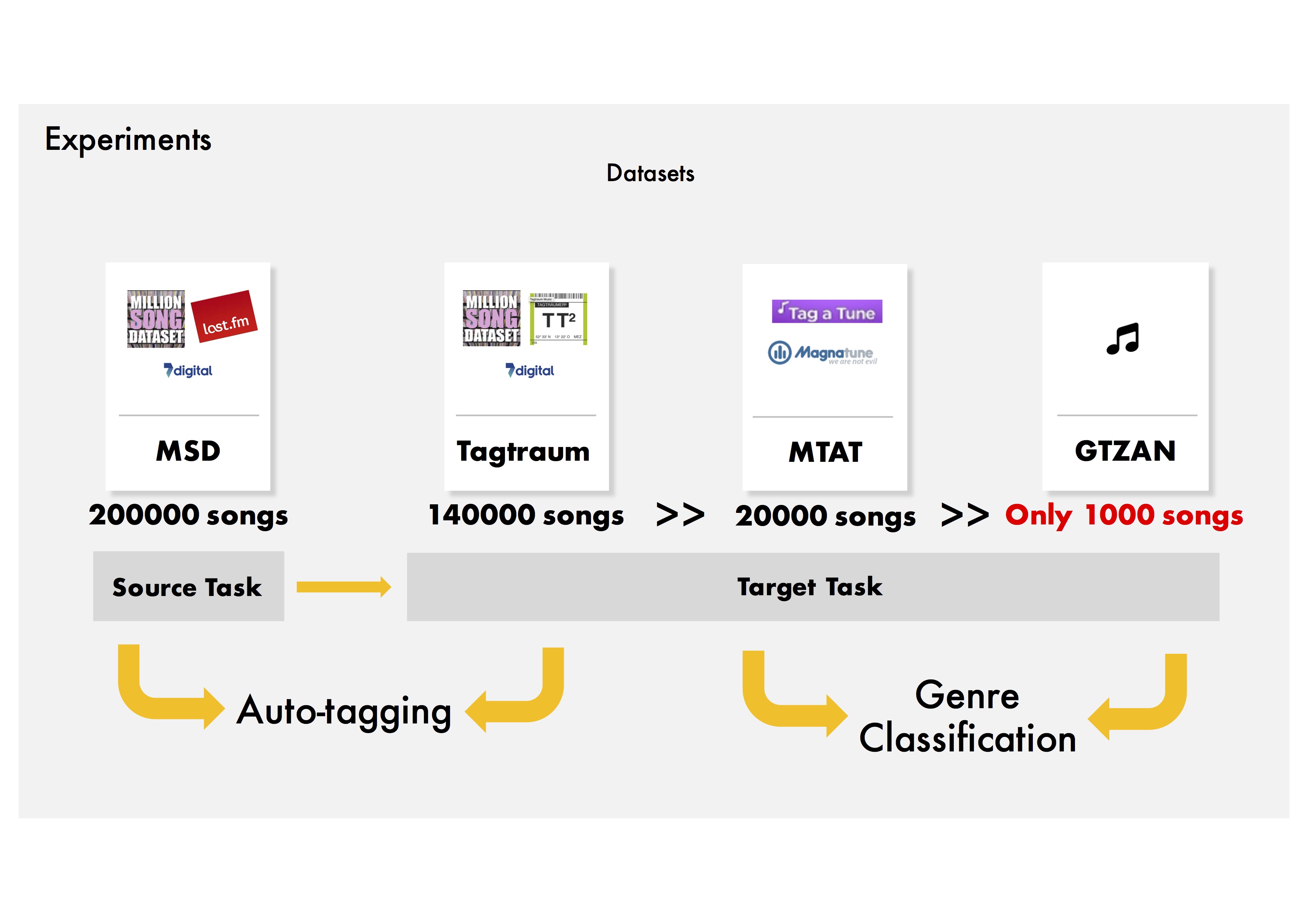

Datasets: MSD, Tagtraum, MTAT, GTZAN.

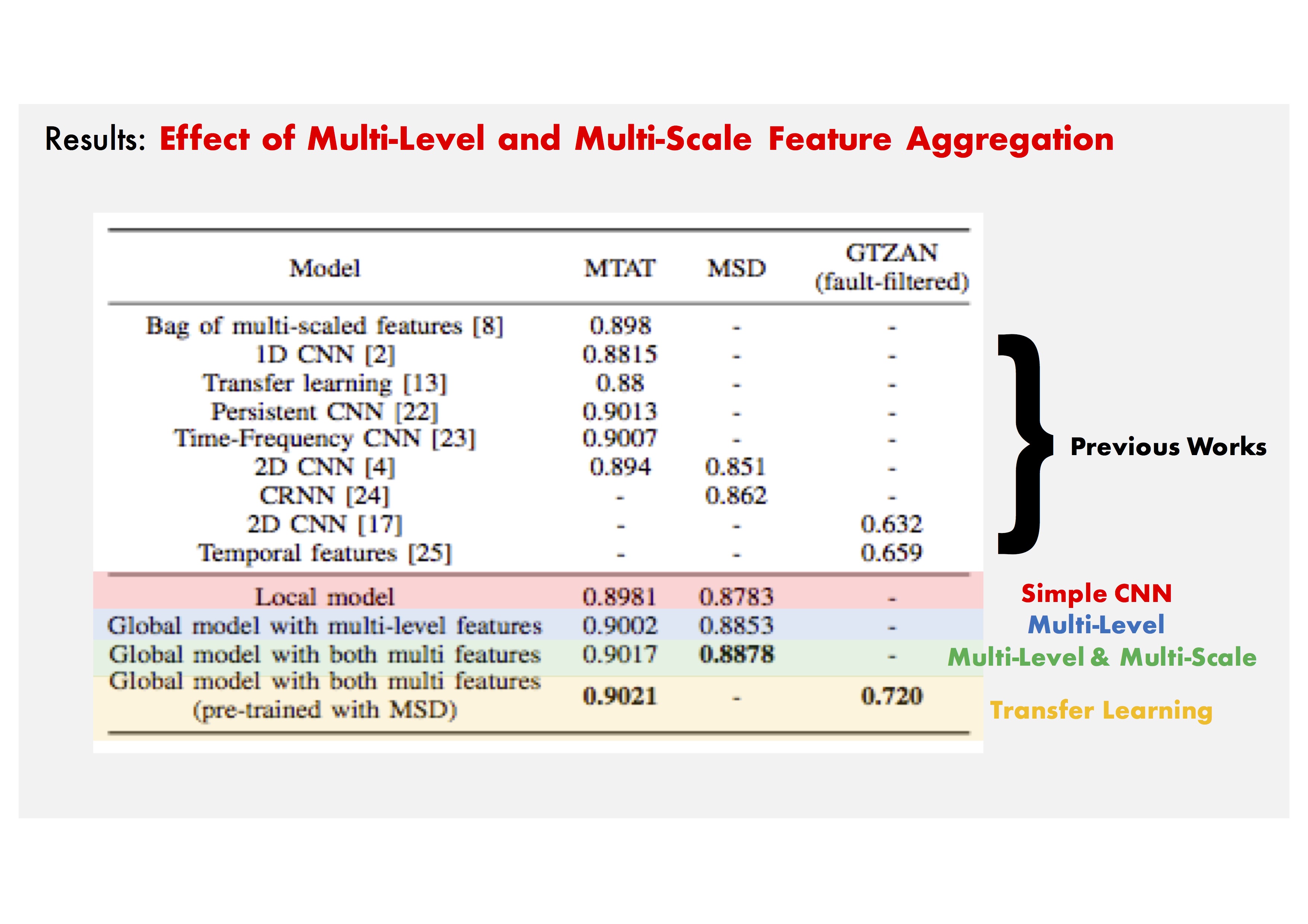

Results: Comparisons.

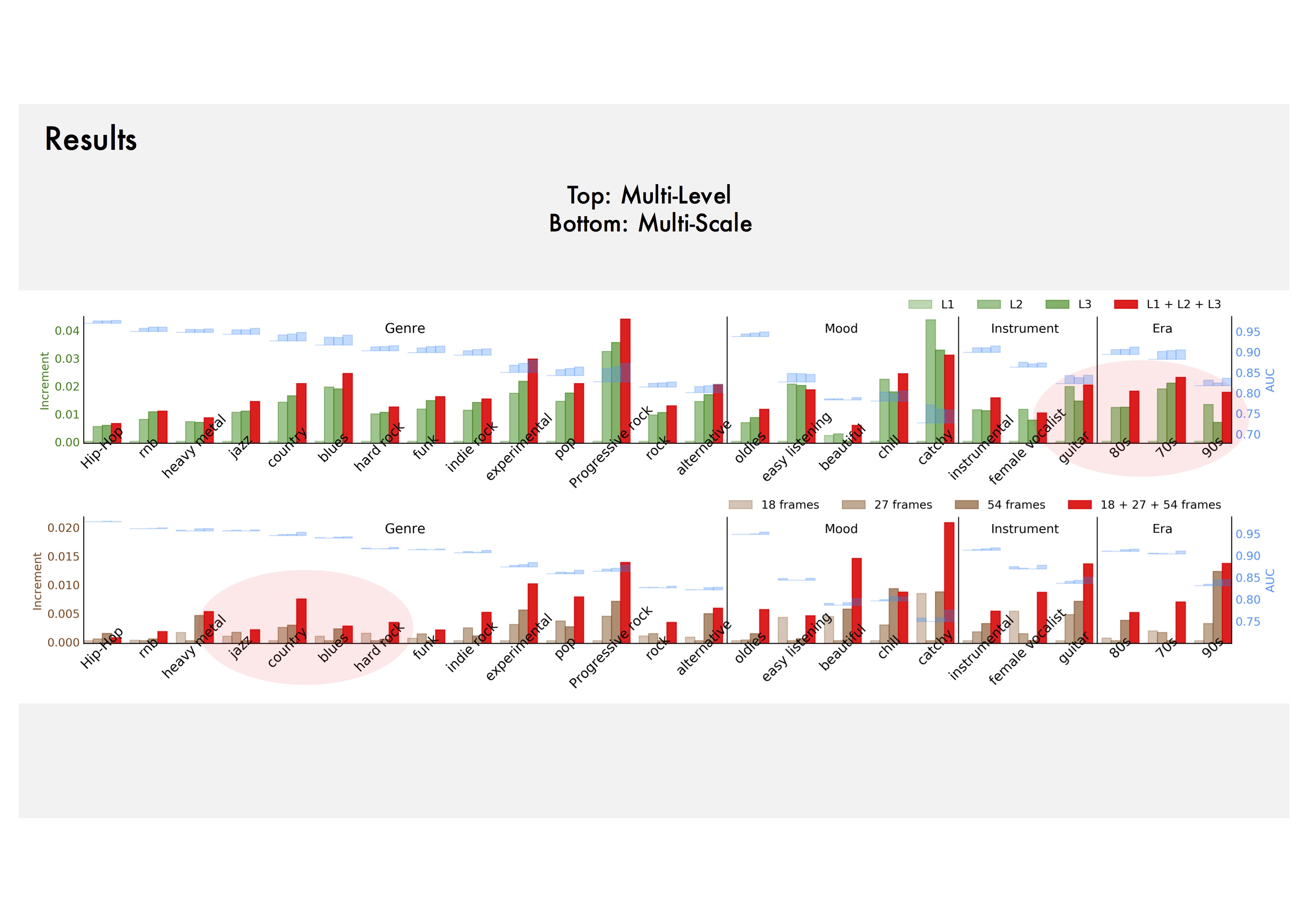

Analysis: We can see that some tags are indeed located at different levels and scales.

Check out the paper for more info.